From Psychologist to CTO – CTO Talks

Hello, and welcome to another episode of CTO Talks. My name is Dmitriy Stepanov, and I am a Co-Founder and CTO of Glorium Technologies. This video podcast is for technical leaders and those who want to become them someday. And we have guests who possess extensive experience in the area of technology. And tonight's guest is Stephen Johnston, a CTO at PubWise. He has 25 years of experience in online software and a unique perspective on the intersection of humans and technologies. We're going to discuss this later today.

Stephen founded PubWise in 2016 and focused on a machine learning-driven approach to programmatic advertising optimization. Stephen, it's nice to have you on our podcast today. Tell us a little bit about your company.


Thanks for having me on, and looking forward to the discussion. PubWise is a digital advertising optimization company where, in traditional terms, we connect people selling advertising to people buying advertising. And we optimize those communications between them, whether open market bidding, direct transactions, deals, or anything of that nature. We’ve built a management platform for a new technology in the space called Prebid. It's an open-source project that allows many of the auctions and many of those transactions to happen inside the browser.

The change there, largely for the industry, was a lot of information that was previously bottled up inside intermediaries is now available to publishers. And so we built technology and a platform to help publishers leverage that. Primarily, our differentiator is what we call our Smart Path Optimization Technology (SPOT) suite. It's essentially a series of optimizations for these transactions, and we have recently been granted a patent on the client-side portion of that, which does demand path optimization. It’s a lot of decisioning and modification of the configuration of different potential buyers. We're doing that while providing full transparency and observability.

That is really our goal for our publishers. We're taking that trend that's been intact for a while around observability, surfacing important information and only the important information, not just a steady stream of useless data. And we're bringing that into our space in advertising, and you'd probably be surprised by how little information has historically been provided to publishers. They're often involved in the transaction, but all they see is final clearing prices or a check at the end of the month. They don't really see who was involved, why they bought, or the trends over time. And that's what we're working to provide people.

Well, you would think that in 2022 all this information would be readily available and readily used, but I guess…

Yeah, you know, thinking about it, not cynically, it's hard to track this amount of information. And we process 300 billion transactions a month, and each of them outputs a certain amount of data. And it's not just whether it succeeded or failed, but who participated, what time of day it was, what their relative bids were, and the aspects of the request. So, there are a lot of dimensions with a lot of variability, and you can't just sum everything down to these nice, easy aggregated rows.

And so, you know, the challenge for us as a provider is the same as many other observability platforms: you've got the stream of data relevant to the publisher or the person who's turning the knobs, and you know, flipping the switches. And that's where we really started looking at machine learning and saying…

Exactly. So, we can take this and start to break it down into this hierarchy of what only people can really do, like getting these insights and intuitions, or maybe contracting, right? We're not to the point yet where a machine learning algorithm or an AI is going to pick up the phone and do a deal with an external company. Only people could be involved in that. You know, we're not yet at the point where AI signs a contract, right? We may get there, but that's something that only people can do.

And so, we look at these big-picture things, and then we start to deconstruct the process and the domain of the problem, and then what is appropriate for machines. I want to extinguish all the business processes that only determine whether one number's bigger than another because that's the space where machine learning should just be doing everything.

I wanted to ask this question at the end, but it's natural to be asked now. Is machine learning ethical? I mean artificial intelligence in general and specifically in advertising because you are taking some personal information and twisting it into the advertising. So, what's your view on this? How do you feel that you are in the middle of this transition and, in general, machine learning? What's your thought about this?

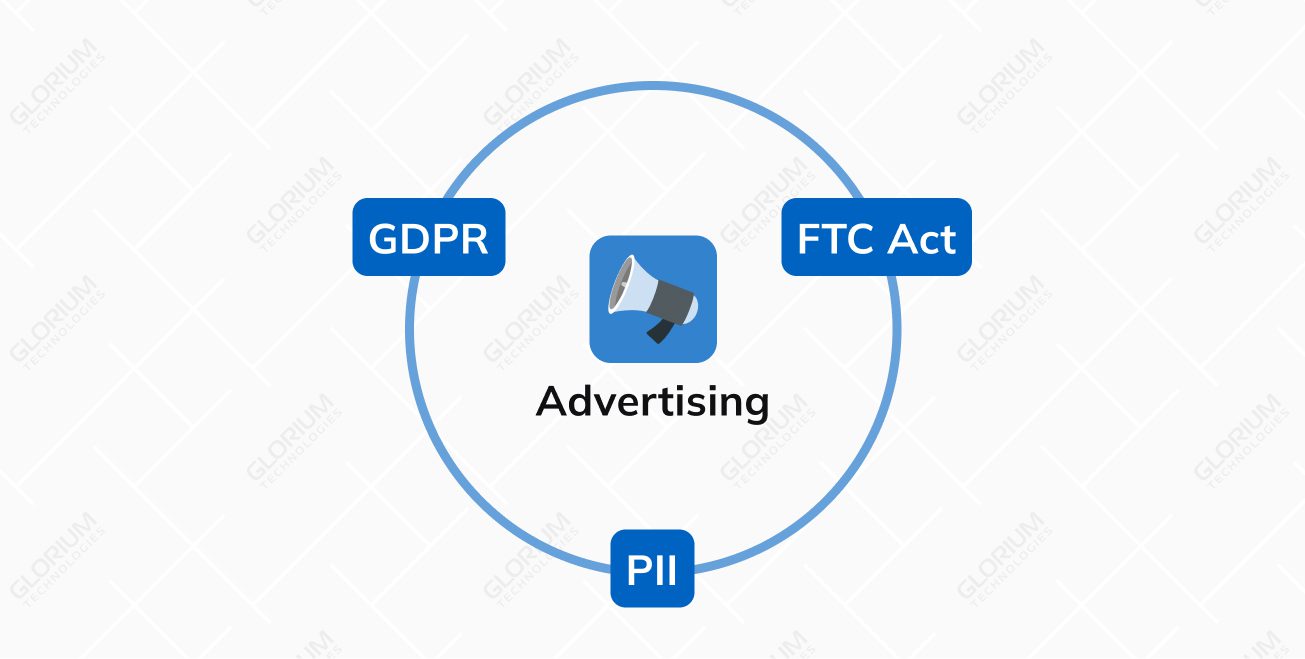

When we started, we were looking at the regulatory space. We were looking at consumer sentiment, and what direction the wind was blowing regarding the kinds of questions you're asking. The question usually arises around the role of data and privacy. And we have focused on building technologies that do not directly rely on user data. We don't involve PII in making our decisions. We don't require special permissions in the context of GDPR and that kind of thing.

So, our decisioning is more about providing the information that other systems may use to do that kind of work and determining which provides value. And I think this is where we get into the conversation of ethics. So, you know, ethics is a sort of right and wrong decision, and I think that in advertising, the core of that has been that people have decided that it is wrong to use a person's data without a fair exchange of value.

And we're looking at publishers and their role in that. I think it's equally wrong for the industry to hoover up all this publisher data and then reuse it over and over and over in the third-party cookie kind of landscape without providing value back to the publisher who originated it or to the user who originated it.

I think you can be ethical in the context of all those things. You just have to be aware of where the boundaries are. And it isn't purely a legislative question. It's what consumer sentiment is and where it is going. So, third-party cookies are on the decline, and we will have fewer third-party cookies. I think there will be some tail on them. And they've been what I call the high fructose corn syrup of the ad industry. It goes over everything, you can add it to everything, and it provides some value. Still, I see first-party data and technologies that rely on it ultimately benefiting publishers who aren't just going for bigger audiences but are really participating in the value exchange.

And I think that's where the question needs to be. You can't just remove everything right like you could just get rid of advertising, you could get rid of the free and open web, and you're running…

Yeah, I think it's a matter of what technologies allow everyone who's got a stake in that to steward it properly, manage it, and do that sort of thing. And that's where we come into play, ensuring that you're surfacing that data to the companies that provide you value back.

And if we can do that, then as more comes in terms of “we should be tagging it this way, or we should be controlling it this way, or we should be keeping it for this long,” you know, these things that are more sort of process- and legislation-oriented, we will be able to rein that in, instead of the Wild West that existed with third-party cookies.

It was 1995, the web was new, and I was originally looking at electrical engineering. I got into psychology, but I always had a hobby interest in programming. I mean, I started programming when I was 12 or something. But, for whatever reason, the way that programming or computer science classes were structured and the way the degrees were structured didn't really appeal to me.

So, I looked at psychology as what I wanted to do from a degree perspective. I was interested in psychology. I became interested in test design. Furthermore, I became interested in things around AI and machine learning in a psychological context. I became interested in whether there are future therapies around virtual reality experiences or AI interaction.

I sort of worked through psychology. I was constantly pulled back to these questions of technology and the interactions of humans, you know, in the context of what I was studying. And, you know, certainly as an undergrad, I didn't learn as much about computer science as I could have, right? But I was super interested in technology, so when I started talking to the co-op program, I said, you know, hey, what can I do? It's sort of at this intersection, right? And the answer was that we don't have anything for you.

And so I went to the IT department, then I said, what can I do that's technology-related, and so I'll study psychology as an undergrad. I'll do technology more as a co-op thing, and then I'll find my own way to blend these things. And it took a little while, but I'm back there now, very much blending those things.

I learned about reading research papers. And so, you know, if you read a crypto paper, and it's got some math, and some data in it, or you read an AI, you know, approach to something, those are very similar to psychology research, and that sort of thing.

I don't think so. You know, psychology for me was very natural. It fit into what became, you know, almost more of an entrepreneur kind of lifestyle in college, right? So, I went back to that same co-op program, and they said: we have this problem, we want to place six-month co-ops, but people come to us all the time with six-week projects. And that's the kind of thing you notice as far as starting a company. I said, well, why don't you give me the six-week projects, and I'll start a consulting company while I'm in college.

And so I basically started getting, you know, lead generation from the university for these projects that they couldn't, you know, put full-time students in. That cluster of things was so valuable to what I do now that I wouldn't want to change it.

Well, I mean, I totally understand your point in the sort of direction of your question. And I think that, in general, I might recommend an MBA or a computer science degree for the average person. But I think, and particularly if you want to go from a few years in school to a few years later really starting on this sort of progressive corporate track into larger companies, right, you know, my experience has been startups. It's a much scrappier sort of approach. If you're trying to fit into the expectation of a job market and sort of what they're going to communicate back out to the job market, then clearly, an MBA or computer science degree will align better.

But I think that innovative people are often synthesizers, and they're seeing trends across disparate things. At the intersection of them is where they find innovation and future value that other people haven't seen yet. And so, you know, people along divergent paths of anything may be more likely to stumble across those things.

Absolutely.

I've been through several evolutionary phases of the answer to this question. So if you don't mind me taking a little time to answer that, I think there are almost a couple of different answers.

The first app we grew was pre-cloud, and it was a content management system for World of Warcraft gamers’ guilds, clans, and these sorts of things. And at that time, we were growing so fast, we were ordering database hardware ahead of time on these three-month and six-week leads. So, we were there calculating disk usage rates and these kinds of things. And I think that, fortunately, that's probably not the kind of issue that most people will deal with now, particularly when they can leverage cloud technologies.

My first sort of thing, in general, what I learned from that phase and what's still applicable now, is that simplicity is really the starting point. As architects and CTOs, we often have trouble differentiating between actual and manufactured complexity. And actual complexity is that some things are hard. Some things have a set of steps, and we try to abstract these things. And when you abstract some things, you've just removed something, right? An abstraction always removes some sort of fidelity. So, if that process is complex, you can't abstract it away. And if you're doing something interesting and innovative, you will work in some areas with actual complexity. So, you need to refine or remove the areas that don't.

And so, when you do things like, "We're going to start with three different types of data source because there's some sort of, you know, abstract concept of perfection, like we're going to go with Memcache and MySQL and, you know, a key store and this and that," you add all of this unnecessary complexity, and you're taking away from the bandwidth to deal with the actual complexity, which is ultimately what your customer wants you to solve. And so, I think that's the key to getting to the point of scaling, because what scaling is then going to do is start to stress those systems, and often in ways you didn't predict.

It comes with experience, but, in general, you cannot predict everything.

You can't predict. I think it's easy to point to the general area where something will happen, but its exact nature is sometimes unpredictable. If you're just going to scale disk usage, like you're saying, "Hey, we're going to do file uploads or something," you can think, "Well, you know, we're going to stress disks." But it often ends up being an API between them, right, or some other service. And so you know where the pressure will be, but when you think of it like a river flowing, you don't know exactly which dam will develop along the way or along that critical path. And often, the answer to that evolves over time, so if you keep things simple, I think that's step one.

Okay, keep it simple. I like it, and I actually follow the same stuff.

Yeah, and you know, I think the other thing is, I can talk about it like cadence versus goal. You're building this, and you're building this vision. You've pointed at something in the future, and often the customers you're working with may not be 100% on board yet with what you see three years from now, but they're asking you to solve a near-term problem.

And I think that's the next element related to scaling. If you focus so much on being able to scale this thing you see three years in the future that you forget the cadence of value delivery related to what your customers are asking you to do daily between now and then, that's sort of losing track of simplicity. But it's more about understanding that there has to be a cadence of value delivery that is more fundamental to the customer than the vision that they may not yet be fully on board with.

Yeah, and that's where I use sort of cadence. And so, we often deconstruct what we need to deliver into relative proportions of core, vision, and long-term value and ensure that we're hitting a cadence on all those things.

So yeah, you're describing the struggle of, like, you know, what's the vision or mission of a company. And then, if everybody has a clear idea of why this thing connects to it, that can be your touchstone across what you're talking about. And it's what allows you to say, "Doing this thing related to this core technology is related to the future business value of meeting a customer need that will increase signups x percent."

And when you ask questions in that direction, you get answers in that relationship. And so I think you can train developers to think that way. And that's also a matter of team size. When you're dealing with a startup with four or five developers, I think you want developers who are more inclined to think that way. As the team size gets bigger, you have a role for more people who are hyper-focused on delivering a certain thing.

There are thresholds, and if you're talking about one to ten, to a hundred to a million, to a billion, that's like a linear thing. If you're starting at a billion, you may find that you have to go.

I think that when you're talking about scaling product usage where you're talking about consumer purchase (and we did that with Gamer Launch and GameSkinny) then you really can build a prototype, get it going, find the stress points, and that is a perfectly workable solution. And your focus should be on effective and fast, not on perfect. And you know that that is 100 percent the area where perfect is the enemy of good enough.

Sometimes when you're talking about enterprise kind of connectivity where the initial volume is very high, you start having a different starting threshold. And there, you've got to get a little more serious about mapping out how big the volume will be and what stresses are in the system. But you still just end up with another prototype at that scale, and you're still going to end up with another point at which some of those things break down.

And with new cloud technologies, we start with many things where my recommendation to the team is, if we can do this manually, let's do it manually until we prove there's customer value. When we talk about internal processes, there's a lot of inclination to automate early, and I would recommend everyone just put the keyboard down and do it manually. And these internal processes are often where companies feel a lot of stress around scaling.

The problem is, if you automate the wrong process, it's more detrimental than letting humans work through what the process really should be. But we need to understand that once the person gets it right, once the customer is responding to its value, once what you've done manually is getting a good response from the market, that's the time to automate it.

In my opinion, you can approach software development by treating everything as a prototype. This means that even when you reach the pre-scaling phase, you continue to view everything as a prototype. It's important to remove any negative associations with prototyping and instead recognize that everything is a prototype for the next version as your company grows rapidly.

And so we should just say that being a prototype doesn't mean it's not secure or that business continuity isn't considered, and that sort of thing.

Whatever expectations you have about a prototype, production is just more and more restricting them.

OK, let’s move on to the CTO role. Do you consider yourself a successful CTO?

Yeah, but that's sort of a tough word, “successful.” I've built multiple applications, they've had a good market response, and they've been profitable. I think my goals are massive, and I haven't achieved those massive goals yet. So, it's still a process.

I think that I would focus less on whether I am successful and more on whether I can convey to the people that I'm working with the values that I have and how we build the company and the product. And I have become more successful at that. I think I've become more effective at just telling people the group's expectations. But leadership's tough, and motivating people's tough, and, you know, I'm often on small teams where I'm doing a little bit of development and management, and the push and pull of those two schedules and expectations is very difficult, and I think we can always improve. I read a lot of books. We've got a stack of books here that are sort of the current kinds of things.

I'm reading a book by Steven Kotler. It's called "The Art of the Impossible." He talks through high-performance behaviors and many things, like I was describing earlier, about researching a bunch of disparate things and then looking at the edges of them. And like reading a bunch of books and finding topics and then continuing to research those topics. A Wikipedia-style deep dive on the world kind of thing. And then, you know, a lot of times, interesting businesses and interesting concepts exist at the edge of those things.

He talks about flow states and things you can do to get into flow states, the things you can do to increase productivity. I found it interesting. It's one of those books where he describes more systematically some behaviors that I've always kind of done, and he fills in a lot of gaps: if you're already doing these things, what you can add to that to improve productivity.

Yeah, I believe it's important to have a clear set of beliefs and to express optimism about the likelihood of those beliefs becoming reality in the future. However, it's crucial to have a background process in place where you constantly reevaluate new information. That's tough for smart people, it's tough for anybody, but it's sort of this mix of pragmatism and optimism.

I value curiosity, I value a little bit of stubbornness and the old programmer joke about programmers being lazy. I think there is some value in a person who is stubborn, so they'll stick with something, but who values and understands removing those things in the long term, so they can get on to other challenging topics that they're curious about.

And I think that when I was first hiring people, I often tried to hire, like, against my weaknesses. I'm not very ordered, I tend to be somewhat abstract, you know. I sort of thrive in chaos, and I used to think what I need to do is find people who are really ordered, right, and they'll somehow become the other leg of a stool.

What I've realized recently is that I drive those people crazy. I've come to realize the importance of having people on my team who are able to thrive in chaotic situations. Some things within the company culture are impossible to change, like the saying goes, "you can't change the spots on a zebra." There are certain things that are going to be a part of the culture. Fighting against my inner nature is not fruitful but finding a way to direct that to the goals is where it works.

So, a little bit of meandering on that in terms of values, but I think pragmatism, optimism, and curiosity are the three big ones.

Excellent. Let's go back to what made you successful as a CTO. So, to summarize: your education, receiving a psychology degree, and obviously your continuous education. I know that you're continuously studying new technologies, new topics, new books; every time something new is released, it's on your table. You said that you started programming at around 12 years old.

I think just, you know, I constantly challenge myself, always learning. But you know, there are a lot of different CTO positions, so I'm really talking primarily about my kind of role in startups. It takes a real lack of fear, you know, if you're growing something and you're doing something new. You've got to get over the idea that somewhere out there is some magical person who already knows how to do this. Yes, there are people smarter than you; it's not important to think that you're the smartest person in the room. I'm saying that if you're truly doing something interesting and disruptive, then the reason it's interesting and disruptive is that 100 people haven't done it before.

So, I think it's important to find the people who are doing related things and are doing them in interesting and innovative ways. And so, when we're talking about observability and machine learning inside of advertising, it isn't being done in the way that we're doing it. It isn't being shown to publishers as much, so I'm always on the lookout for other industries that face similar challenges to ours, and I'm interested in learning about the strategies they use to address them. I believe that one key to success is being able to explore other areas and draw connections between them and our own work. By doing so, we can identify effective tactics and adapt them to our specific needs.

And that's, you know, always been a skill of mine so.

Excellent! Well, I couldn't agree more on this one, actually. I mean, you're literally almost describing my life. [Laughter]

Okay, good. One of the last questions here. Your favorite technology, or, I don't know, a favorite trend that you're keeping a close eye on right now. I assume that’s something related to, I don't know, machine learning, artificial intelligence, or something. What is it? And your curiosity and stubbornness, as you mentioned in the values, I think they fit very well into this puzzle.

So the number one thing that I’m focused on right now is actually basically developer infrastructure.

Developer infrastructure, developer platforms, CI/CD are in the same space. How do you get value to the customer, value to the product faster, more effectively, and more accurately? And we had sort of this Kubernetes. I'm looking at things like Codespaces from GitHub. It has really changed how we develop, particularly during COVID, and since then we're just keeping with it.

We work with large data sets and deal with a lot of customer variability, which can make it challenging to handle everything remotely. However, Codespaces has been a huge help by providing a shared development environment that allows us to work with remote contractors. Now, new developers can quickly spin up our development environment in just 10 minutes, which has made the process much smoother.

Earlier, you asked about scaling, and there are two components to it. The first one is the rate of growth of customers and the volume they produce. However, when it comes to innovation, the S-curve of adoption is also important. As a company grows, the secondary needs of its user base grow, and these needs have value that must be vetted. This is often why a team grows, as they focus on second and third-order things alongside the main line of business.

As the company scales, the focus shifts from just handling volume to delivering value to the customer as quickly as possible. This can involve more changes, which need to be controlled, and this is where infrastructure dealing with change in the system becomes important. In my current role, my focus is primarily on this aspect of scaling.

No, I appreciate the opportunity, and, you know, I enjoy talking about technology stuff.

Bye.